AI Fails explained #1 - Tay and Natural Language Processing

In our new article series “AI Fails explained” we stroll through the most scary and hilarious stories of Artificial Intelligence messing things up — from cannibalistic bots to fortune telling algorithms predicting pregnancies. Providing a hands-on explanation of the technology behind it, we demystify failures and highlight practical use cases.

About Tay

In 2016 Microsoft released its most infamous chatbot on twitter — Tay. What was initially meant to resemble the language patterns of a 19-year old American girl, quickly turned into a racist slurring machine. Learning based on interactions with other users, the bot turned from tweeting innocent phrases like “i love me i love everyone” to “bush did 9/11” in only 24 hours. Tay was quickly shut down by Microsoft, which apologized stating that “a coordinated attack by a subset of people exploited a vulnerability in Tay”. To understand what went wrong, we need to dig into the way how machines perceive and process language — a technology commonly known as Natural Language Processing (NLP). Please note that this article only provides a superficial explanation of NLP and does not aim to explain how Tay worked in detail.

Natural Language Processing

Since computers exist people have tried to teach them how to process human language, but only recently, with the rise of new technologies and ever-increasing computing power, NLP has made its entry in our everyday life. Whether it is a device processing human speech — like Alexa — or written language — e.g. the text prediction feature in modern smartphone keyboards — all rely heavily on NLP. But how are machines able to understand language and extract information from it?

Computers are built to process structured numerical data. The inconsistency and volatility of human language therefore turns NLP into a complex task, what makes processing language a very complex task. The meaning of words is mostly context related and does not follow strict rules. Consider the following sentence:

“I saw a man on a hill with a telescope.”

Did the person see the man with his telescope or did the man on the hill have a telescope? As you can imagine it can be very complicated to extract meaning from language. To reduce and handle this complexity we break up the problem into smaller subproblems, which are solved step by step by different algorithms. Feeding the result of each individual step into the next algorithm, we build a so-called “pipeline”.

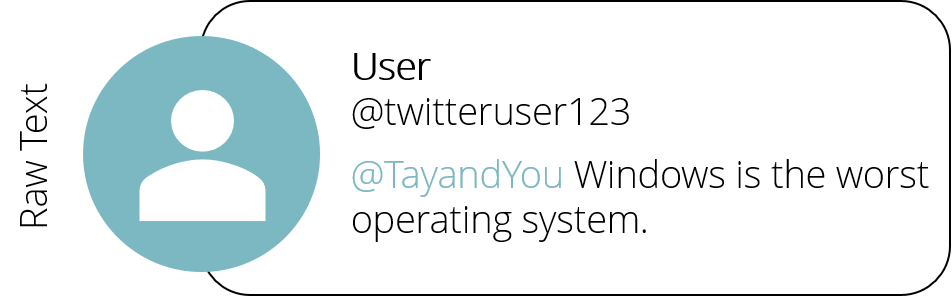

Raw Text

Let’s assume someone wanted to insult Tay’s creators and tweeted the sentence “Windows is the worst operating system.” to Tay (you can imagine more politically incorrect sentences yourself). At this point the sentence is nothing more than a collection of characters to a computer. Numbers, letters or spaces basically all mean nothing. [RS1] To retrieve information from this sentence, we need to input it in our NLP pipeline, depicted in Figure 1.

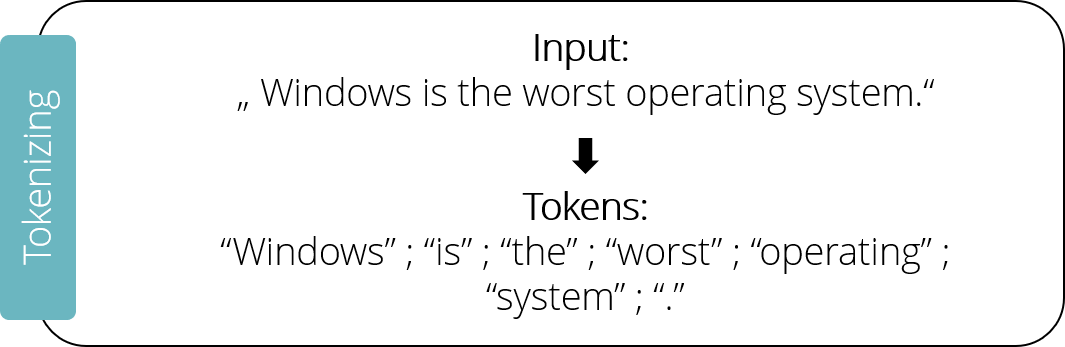

Tokenizing

The pipelines first step tokenizing basically means splitting up a sentence into its individual words. Punctuation marks will also be treated as separate tokens. Therefore, our example sentence would result in seven individual tokens: “Windows” ; “is” ; “the” ; “worst” ; “operating” ; “system” ; “.”

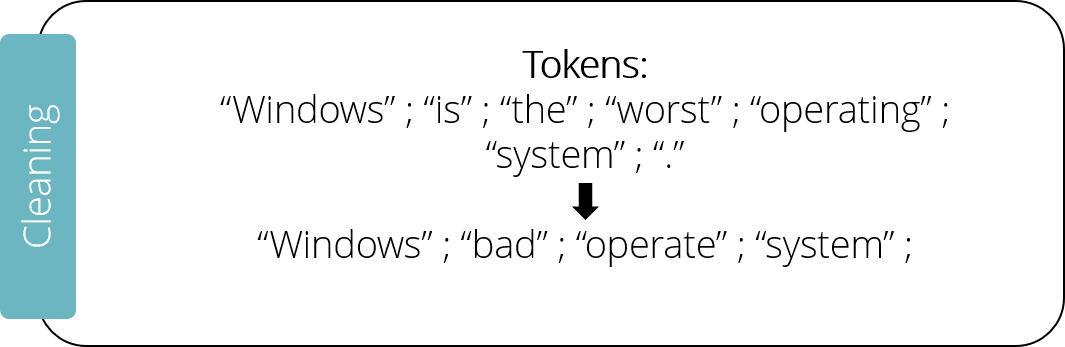

Cleaning

Cleaning the tokenized input often consists of multiple steps. First, the so-called “stop words” are removed — frequent words such as “and” or “is”. A database containing the most common stop words is used to compare it against the input, if a word is contained in the database, it gets removed. In our example the sentence would get reduced to “Windows worst operating system”.

Furthermore, the process of “stemming” helps to deduce the same semantic meaning for similar words, e.g. “operated” and “operating” could be transformed to their common root “operate”. This can be done by simply cutting or replacing the ending of words. More complex substitutions, like transforming the superlative “worst” to its root “bad”, also rely on the help of a database.

Vectorizing

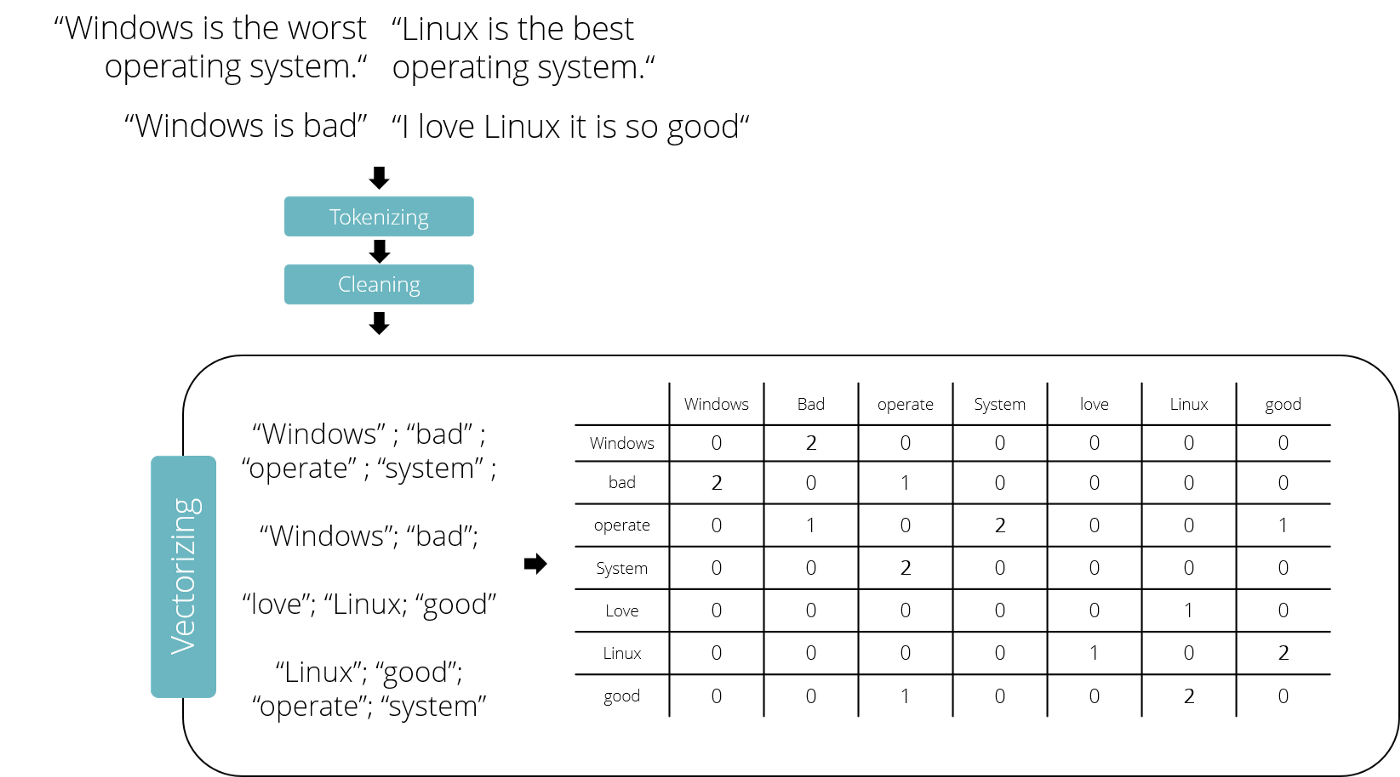

After tokenizing and cleaning the input, the computer only sees the useful parts of our message, but still works with characters. To be able to process messages in a Machine Learning system, they must be transformed into a numerical representation. This transformation is called Vectorization and while there are multiple approaches to this problem, it mostly relies on counting the occurrences of every single word in all messages. One possible approach is the concept of bigrams, where we look at two adjacent words and count the occurrences of their combination. This approach already generates information about the connection of words as a biproduct of the vectorization.

Let’s assume a community of Pro-Linux and Anti-Windows fanatics wanted to teach Tay about their personal beliefs. To do so, they would send messages to Tay, stating that “Windows is bad” and “Linux is good”. After tokenizing and cleaning all messages, a matrix is built with individual words as columns and rows. For each word the occurrence of the adjacent word is counted. For example, if the words “Windows” and “bad” occur two times next to each other, the resulting matrix values will be two. As you can see, every word is now represented by an individual vector, that contains its occurrences with adjacent words — for example “Windows” was transformed to [0,2,0,0,0,0,0].

Machine Learning

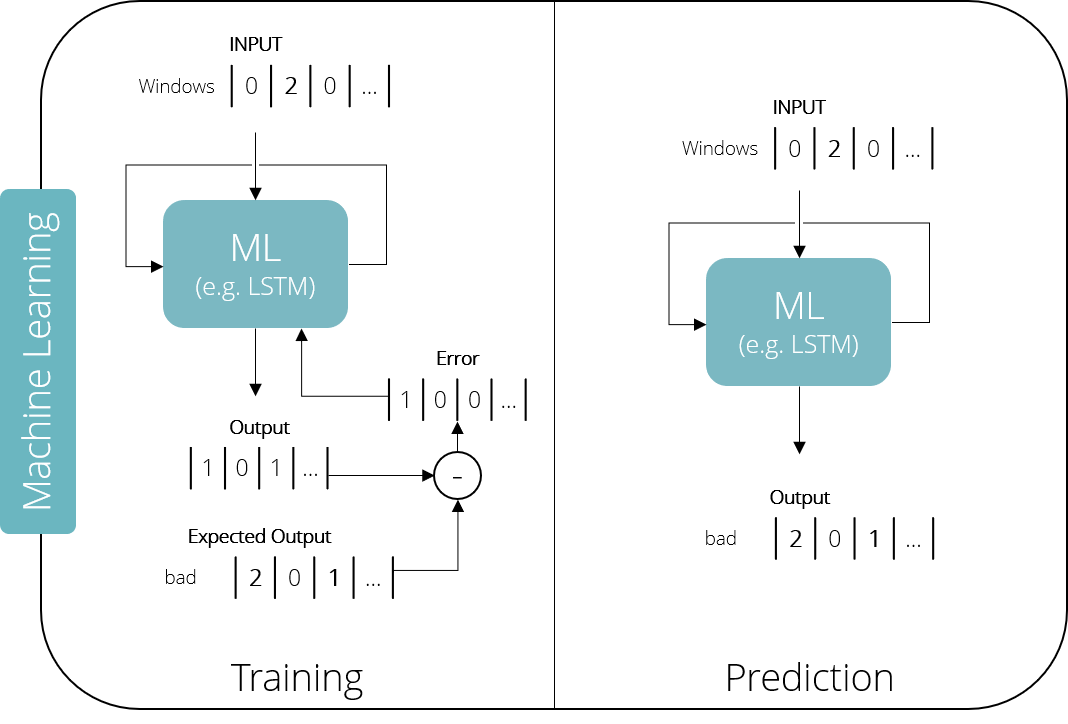

Having a numerical representation of our words, we can now feed them into a Machine Learning system. Possible examples of such systems range from simpler models like Naive Bayes or Support Vector Machines to complex Neural Networks. They can be used for a broad variety of tasks, like spam detection or language translation. These so-called “supervised machine learning” algorithms all have in common, that their usage is split into a training and a prediction phase. In the training phase we provide known pairs of input and expected output to the model. The generated output is then compared to the expected output and the error is fed back to correct the model. This process is repeated until the algorithm achieves the required level of performance. In the prediction phase, we can use the trained model to predict new values to unknown inputs.

In the case of our Twitter-Bot we would like the model to predict the next word in a sentence. Therefore, we first train it with example sentences, always providing a single word and comparing the output with the next word in the sentence. Because human language is highly contextual, it is necessary for the model to not only learn word to word dependencies, but also how multiple words act together in a broader context. Recurrent Neural Networks — especially Long-Short-Term-Memory Networks (LSTM) — enable these capabilities by feeding back an internal state as a second input to themselves. In that way the network learns to generate new phrases, based on single words and in the context of a whole sentence.

Generating new Text

We learned how to transform text into a numeric representation and how machines can be trained to predict words, but how can a computer generate an answer to an entire tweet? Again, this process is split into two phases — encoding and decoding. In the encoding phase the transformed input tweet is fed into the Machine Learning algorithm word by word (time steps t1 to t4) until a stop signal — in this case the end of the tweet — is reached. This sets up an internal state in the model representing the context of the sentence. Based on this state the model now generates a first word in the decoding phase. Each generated word now serves as a new input to predict the next word until the model itself generates a stop signal, therefore creating a new tweet (t5 to t8). Since our example model was trained on data stating that “Windows is bad”, it is likely to give a similar answer in the context of its opinion on Windows. If you imagine training the bot on racist messages, you can easily understand how Tay was tweeting more about the holocaust than teenage pregnancy. The root of this problem was Tay learning about the dependencies between words, but not about their actual meaning. For the bot the word “whore” didn’t have a more negative connotation than “sunshine” — both were in the end just numeric vectors.

Tay did not know about the actual meaning of words

Another remark on Tay

The vulnerability for racist tweets as training input was not the only weakness of Tay. Giving the bot the ability to repeat given sentences word by word actually caused a lot of the most hideous tweets Tay was sending out, as the following example depicts.

Use NLP in your business

Our team at Unetiq builds applications using the latest advancements in AI — such as Deep Natural Language Processing — to analyze texts, create contextual information and automate engineering processes. Imagine your machine could give you a natural language report on its own or detecting project failures before they happen. Contact us and learn more on how to use the power of NLP in your projects!